Spoiler: My Job Is Probably Safe... For Now

A few years ago, I did something mildly masochistic: I spent weeks torturing AI image generators with medical illustration prompts to see if they could produce anything remotely usable. The results were... let's call them "educational." I documented the carnage in a series of blog posts that somehow racked up over 500,000 reads, which tells me two things: (1) a lot of people are curious about this topic, and (2) a lot of those people were desperately hoping I'd discovered the secret to making AI spit out medically accurate images so they wouldn't have to hire actual medical illustrators.

To the dismay of the many who contacted me, the results were far from medically accurate.

Back then, AI image generators could make some visually interesting stuff, if your definition of "interesting" includes six-fingered surgeons, anatomically impossible spines, and heart valves that looked like they were designed by someone who'd only ever seen a heart emoji. The tools were fun for concepting and mood boards, but if you needed something a surgeon could actually use in a presentation without getting laughed out of the OR? Not happening.

But that was 2024. It's now 2026, and the AI hype cycle has completed several more rotations. Every other week, someone announces a "breakthrough" model that's supposedly going to revolutionize visual content creation. So I figured it was time to run the experiment again. Not because I enjoy testing whether robots are gunning for my job (though the existential dread does keep things spicy), but because a lot of people keep asking: Has AI gotten good enough yet?

The answer, as you'll see, is nuanced. Which is a polite way of saying "mostly no, but with some interesting exceptions."

Why This Matters (And Why I'm Doing This To Myself)

Here's the thing: Ghost Medical isn't some random graphics shop dabbling in medical content. We make anatomically correct, functionally accurate, clinically defensible medical illustrations for surgeons, engineers, regulatory teams, and medical device companies. Our clients don't care if something "looks medical." They care if it's correct. If a valve doesn't seat properly in an annulus, if a tendon repair construct can't actually bear load, if cellular mechanisms are anatomically nonsensical, the image is worthless. Worse than worthless, actually. It's a liability.

So when I test AI image generators, I'm not looking for "cool medical vibes." I'm asking: Could this pass clinical review? Could a surgeon use this in a training deck? Could a regulatory team submit this to the FDA without getting roasted?

That's a much higher bar than "wow, that kinda looks like a heart."

The Lineup: Who's Fighting For Their AI Lives Today

I'm testing seven generators, plus one human baseline (because someone has to remind these bots what "correct" actually looks like). Here's the roster:

The Rules: No Cheating, No Excuses

Before anyone accuses us of moving goalposts, here's how this test works:

Every generator gets the exact same prompts. No prompt tuning. No retries. No "well, it did better on the third attempt." One shot. Same instructions. Same expectations.

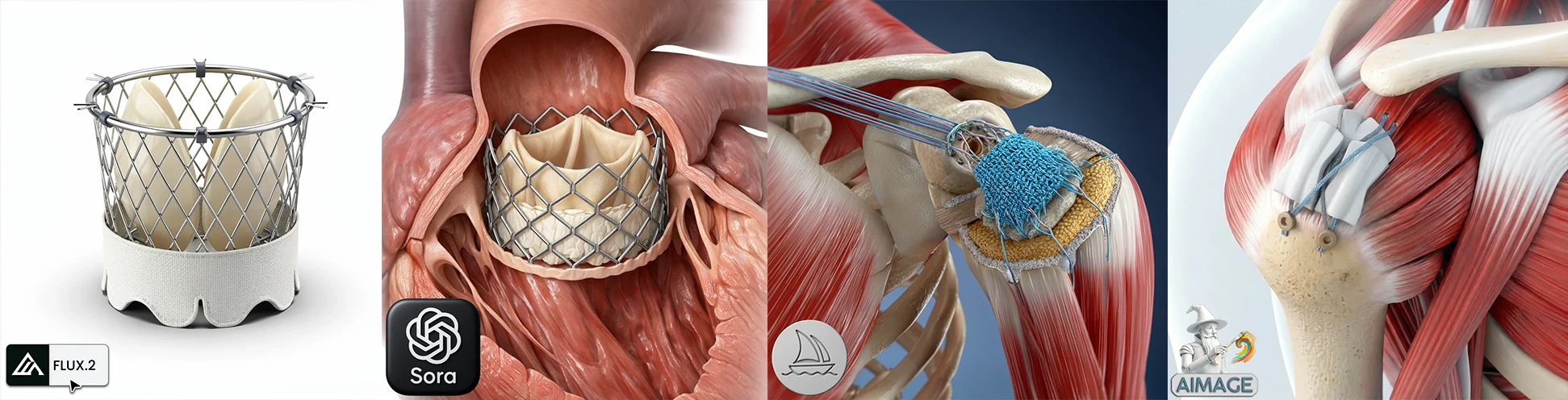

Each system was asked to produce five images:

- A stand-alone medical device (transcatheter aortic valve)

- The same device deployed inside heart anatomy

- An orthopedic tendon repair construct on a humeral head

- An IV catheter with labeled callouts

- A microcellular mechanism-of-action diagram showing healing over time

Every AI result gets compared against a real Ghost-produced medical illustration, the kind our clients actually pay for and use in FDA submissions, surgical training, and investor decks.

The grading criteria are simple and unforgiving:

- Prompt adherence - Did it follow instructions, or did it freestyle?

- Callouts and labels - Spelled correctly? Placed logically? Or gibberish?

- Medical accuracy - Anatomically correct, or anatomically "meh"?

- Conceptual accuracy - Does the construct make functional sense, or just look cool?

- Artistic clarity and polish - Is it presentation-ready, or does it need human cleanup?
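The rubric above can be sketched as a small scoring helper. This is a minimal sketch, assuming each of the five criteria is scored from 0 to 5. That scale isn't spelled out here, but it is consistent with the per-test totals out of 25 that show up in the results. The names `CRITERIA`, `MAX_PER_CRITERION`, and `score_test` are illustrative, and the example scores are made up.

```python
# Hypothetical rubric helper. The 0-5 scale per criterion is an assumption
# inferred from the "/25" per-test totals; the post does not state it.
CRITERIA = (
    "prompt_adherence",
    "callouts_and_labels",
    "medical_accuracy",
    "conceptual_accuracy",
    "artistic_clarity",
)
MAX_PER_CRITERION = 5  # assumed scale


def score_test(scores: dict[str, int]) -> int:
    """Sum the five criterion scores for one test after validating them."""
    missing = set(CRITERIA) - set(scores)
    extra = set(scores) - set(CRITERIA)
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")
    for name, value in scores.items():
        if not 0 <= value <= MAX_PER_CRITERION:
            raise ValueError(f"{name} must be between 0 and {MAX_PER_CRITERION}")
    return sum(scores.values())


# Made-up example scores, not results from this test.
example = {
    "prompt_adherence": 4,
    "callouts_and_labels": 2,
    "medical_accuracy": 3,
    "conceptual_accuracy": 3,
    "artistic_clarity": 5,
}
print(score_test(example), "/", MAX_PER_CRITERION * len(CRITERIA))  # 17 / 25
```

Under that assumption, five tests at 25 points each give the 125-point ceiling used in the cumulative ranking.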

I briefly considered scoring "time to completion," but that idea lasted about ten seconds. Every AI delivered its final image in under a minute, which made the metric meaningless. Speed is the one category where AI already wins by a landslide.

For context, the Ghost baseline images took weeks to produce. That matters. When AI can pass a Turing test against a world-class medical illustration team, its speed alone will be enough to seriously disrupt this field. We're not pretending otherwise. It's only a matter of time before many medical illustrators are pushed into roles that look more like prompt writing and image verification than traditional illustration.

That reality isn't something we take lightly.

Best case scenario: We're genuinely surprised by how far the technology has come.

Worst case: We get a very clear reminder of the difference between "looks medical" and "is medical."

Let's find out.

Test 0: The Anatomy Poster Warm-Up (Or: Let's See If AI Can Even Handle The Easy Stuff)

Before jumping straight into the complex controlled tests, we decided to start with a warm-up. A very simple prompt. Something every image generator should theoretically excel at.

Every one of these models has been trained on anatomy diagrams, from public-domain Da Vinci drawings to modern textbook illustrations inspired by artists like Frank Netter. If an AI struggles here, that tells us a lot before we even introduce devices, pathology, or mechanisms of action.

Each bot was given the exact same prompt without modification.

Why Ghost and AIMAGE Sat This One Out

Ghost Human Baseline: No poster, on purpose.

This warm-up prompt is the kind of assignment AI loves, and human studios should refuse unless a client is paying for it. Ghost is a production shop with deadlines, reviews, and client priorities. Spending real artist hours to create a polished, information-dense anatomy poster purely for a benchmark would be an expensive distraction from billable work. That's the core advantage AI keeps pressing on: speed.

AI can generate a dozen poster variations in minutes, while a human team would need hours or days to do it properly, and then still go through the usual accuracy review cycle. That's exactly how AI starts winning the early rounds. It gets you something usable fast, even if it's not perfect. Once these tools reliably pass a "medical accuracy Turing test," where the output is indistinguishable from expert work to the non-expert viewer, most human artists won't be replaced outright. They'll be pushed upstream into higher-leverage roles like creative direction and prompt and pipeline design, and downstream into quality control, fact-checking, and correction passes. In other words: fewer people making images from scratch, more people supervising, correcting, and approving.

AIMAGE: No poster because it's not trained for this format.

AIMAGE is not a general-purpose, do-anything image generator. It depends on Ghost's reference library and training data. If Ghost hasn't fed it thousands of examples of dense, labeled, textbook-style anatomy posters, then this specific assignment is outside its learned distribution. It might produce something poster-like, but it would be guesswork and style imitation rather than a confident reproduction of a familiar format.

That limitation is important, and it's also fair. Domain-tuned models like AIMAGE are usually excellent inside their lane and unremarkable outside it. So for this test, AIMAGE sat out because the goal was to stress-test general instruction following, layout discipline, and label behavior on a poster format that wasn't part of its training foundation.

So the warm-up results are "AI vs AI" by design. Not because Ghost couldn't make the poster, but because making it would miss the point. The whole reason this comparison matters is that AI can afford to do experiments humans can't justify doing.

Test 0 Results: Anatomy Poster Warm-Up

Quick Takeaways

- Gemini / Nano Banana (17/25) took the top spot in this warm-up because it balanced structure, legibility, and overall clarity. It still wasn't "textbook correct" in a strict sense, but it held together better than the rest.

- Sora (16/25) surprised me here. It followed the assignment cleanly and produced the most presentation-ready "poster" feel of the group, with fewer layout failures than expected.

- Flux 2 (15/25) looked solid and coherent, especially on general anatomical form, but it lost points on the label system. It was close to being great, but not consistently reliable.

- Grok (15/25) did well on overall concept and polish, but the labels were the weak point. It produced medical-looking content that would still need a human to correct and validate.

- Midjourney (13/25) still wins on aesthetics, but it continues to struggle with the exact thing this test is designed to punish: precise labeling and dependable anatomical correctness. It's excellent for concept exploration, but not trustworthy for "educational poster" output without significant human cleanup.

This warm-up doesn't tell us who's best at procedures, devices, or pathology. It tells us who can follow instructions under constraints, handle structured medical layouts, and avoid text hallucinations when the image is mostly labels and anatomy. That's why we start here.

Next up, we move to the first true Ghost baseline comparisons, where the models have to match real production-quality medical renders rather than just "poster style."

Test 1: Isolated Transcatheter Valve on White (The "Product Shot" Test)

This is the "product shot" test. If an image generator can't reliably produce a clean, accurate, photoreal device render on white, it's not ready for serious medtech workflows where device visuals must be:

- Unambiguous and precise - shape, proportions, materials, construction details

- Reusable across formats - web, decks, IFU-style visuals, sales sheets

- Easy to art-direct - consistent angles, lighting, clean background, repeatability

- Low risk - no anatomical hallucinations, no stray parts, no nonsense geometry

This test also isolates the generator's ability to follow instructions without leaning on "medical-looking" context. No blood, no tissue, no anatomy, no drama. Just the device, rendered correctly.

What We're Looking For

- Correct "TAVR-like" construction cues: metal lattice stent, fabric skirt, three symmetric leaflets, plausible commissure posts and top-edge wire detail

- High quality product-render lighting: controlled reflections, realistic materials, crisp edges

- Strict prompt adherence: isolated on pure white, centered, no labels, no extra objects

Test 2: Valve Deployed Inside Heart Cutaway

This test matters because it's where "medical-looking" usually collapses into "medically wrong."

A standalone device render can get away with approximate geometry. Once you place that device in the aortic annulus, you have constraints that are hard to fake:

- Anatomy and viewpoint: The prompt asked for a surgical cutaway from inside the heart looking toward the aortic root, with specific lumen openings and thick, moist endocardial tissue.

- Device plausibility: A TAVR needs correct overall proportions, realistic stent lattice behavior, a believable skirt-tissue interface, and leaflets that look like leaflets, not random folds.

- Procedure cues: Catheter position, marker rings, and a continuous guidewire are subtle but important. When those are missing or nonsensical, it's a red flag that the model is guessing.

- Fit: If the valve would not physically seat at the annulus, it fails the clinical plausibility test even if the render is pretty.

Ghost is the target here because the prompt was written from the Ghost image. So Ghost is the gold standard for adherence, and by definition it gets full points.

Test 3: Rotator Cuff Tendon Repair on Humeral Head

This test matters because it's the exact kind of "looks simple, fails easily" medical render that separates real production-ready output from "medical-ish concept art."

What we're looking for is very specific, and very checkable:

- Correct anatomy and vantage: Proximal humerus, humeral head, and a visible cut cancellous bone surface at the greater tuberosity

- Correct construct: Two anchors seated in bone, two white pads, and two wide blue suture tapes crossing in an X to compress the tendon footprint

- Clean studio composition: Minimal white background with soft translucent abstract wave shapes, no labels, no UI clutter

Test 4: IV Catheter Hub with Labeled Callouts

This one is a stress test for the stuff that usually breaks medical image generators in production, even when the render looks "pretty":

- Hard constraints + exact text: Two labels, exact phrases, correct spelling, correct number of callouts, no extra text

- UI styling consistency: "Futuristic HUD" callouts with amber glow, micro UI details, leader lines, anchor points

- Transparent product realism: Clear plastic hub with believable internal geometry, ridges, reflections, and refraction, without turning into fantasy glass

- Clinical plausibility: Even if the anatomy is minimal, the insertion and scale still need to feel like an IV setup, not a random syringe in skin

- Production usefulness: Can you ship it with minor cleanup, or do you need a full re-build?
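The text constraint in the first bullet is mechanical enough to check in code once the labels have been read off the image. Here is a minimal sketch, assuming the visible text strings are transcribed by hand (or by an OCR step, which is not shown). `check_labels` and the two expected phrases are hypothetical placeholders, not the actual prompt wording.

```python
from collections import Counter


def check_labels(expected: list[str], found: list[str]) -> dict[str, object]:
    """Compare text found in an image against the exact labels requested.

    Matching is exact and case-sensitive, so any misspelling counts as
    both a missing label and an extra one.
    """
    want = Counter(expected)
    have = Counter(found)
    missing = sorted((want - have).elements())  # requested but not present
    extra = sorted((have - want).elements())    # present but not requested
    return {"missing": missing, "extra": extra, "passed": not missing and not extra}


# Placeholder phrases; the real prompt's label text is not reproduced here.
expected = ["LABEL ONE", "LABEL TWO"]
found = ["LABEL ONE", "LABLE TWO", "ERROR 404"]

result = check_labels(expected, found)
print(result["passed"])   # False
print(result["missing"])  # ['LABEL TWO']
print(result["extra"])    # ['ERROR 404', 'LABLE TWO']
```

A check like this only covers spelling and callout count. Placement, leader lines, and styling still need a human eye.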

Test 5: Microcellular Healing Infographic

This test is about biological storytelling and infographic discipline: can the AI communicate a cellular healing progression across time, with correct labels, correct phases, and correct visual hierarchy?

Scoring categories for this test:

- Prompt match and layout compliance - frame, continuous band, left to right progression

- Header tabs and typography accuracy - exact wording, clean placement, no overlap

- Biological storytelling plausibility - RBCs, immune cells, fibroblasts, ECM, vessels

- Design clarity - readability, hierarchy, not visually cluttered

- Render quality - materials, lighting, depth, polish

The Verdict: We're Not Dead Yet

Let me be direct: none of these models are ready for serious medical accuracy.

At Ghost, we do use AI to generate images, but only for very rough concepting, inspiration, and fast color tests. No AI-generated image ever makes its way into the final output we sell to clients. Not because of some moral or ethical blockade, but because it isn't good enough to pass. We use AI to quickly show clients rough ideas, or to show different versions of our work, like swapping out backgrounds, shaders, or other aesthetic treatments. Once a direction is approved, we use the AI output as reference and then build it the right way with our own process and tools like Maya, Houdini, Substance, and After Effects.

How AI wins today: Speed. Every AI delivered images in under a minute. Ghost baseline images took weeks. When these tools reliably pass a "medical accuracy Turing test," speed alone will seriously disrupt this field. We're not pretending otherwise.

Why Can AI Render Anime Perfectly But Fail at Basic Medical Applications?

It's all about training data.

In five years, Midjourney has output hundreds of millions of images of scantily clad videogame heroines and had them scored by its prompters millions of times. This is how machine learning works. Meanwhile, it has been prompted to make medically accurate art only a handful of times in comparison, and when it outputs that art, only a fraction of the graders can actually determine whether or not it's accurate.

So while Grok has become quite competent at outputting meme images of liberal politicians looking afraid and crying, and Sora can put anyone in a plastic action figure bubble pack, medically accurate art won't be possible until the machines have been trained in roughly the same way.

What About AIMAGE?

Our own LoRA-trained model has been trained on thousands of images that we made ourselves. We've even gone as far as rendering every vertebra from every possible angle, with structures painstakingly labeled and tagged. But if we ask it for something it hasn't yet been shown, like "show L4 with a herniated disc and a partial corpectomy," it will likely mess something up completely. The herniation ends up not even in the disc, there's no cancellous bone, and if that's a partial corpectomy I don't want to see what it thinks a full corpectomy looks like.

What AIMAGE is good at is handling prompts like "replace the bony anatomy for acrylic" or "change the background to an empty OR in the year 2099." That's how we currently use it: to show us different ways we could style the visual aspects of a client's output, not to accurately render anatomy.

AIMAGE: What It Gets Wrong vs. What It Gets Right

See the pattern? AIMAGE is excellent at restyling things it already knows. Ask it to invent something anatomically complex it hasn't been trained on? That's where it falls apart. Same story as all the other AI models, just with a narrower (but deeper) domain.

Cumulative Results Across All Tests

The Final Ranking

- Ghost (125/125): The control. This is what validated medical illustration looks like.

- AIMAGE (98/125): Tied for best AI. Domain training matters. When it has seen the object, it performs.

- Sora (98/125): Tied for best AI. Strong general-purpose renderer. Surprisingly competent at medical contexts.

- Flux 2 (75/125): Middle of the pack. Can look photoreal while being medically wrong.

- Nano Banana (62/125): Inconsistent. Sometimes solid, sometimes confused.

- Midjourney (54/125): Still the aesthetic champion. Still unreliable for anything requiring text discipline or medical accuracy.

- Grok (52/125): The meme machine is not ready for the OR.
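To put those totals on one scale, here is a short sketch that ranks them with shared ranks for ties and shows each score as a percentage of the 125-point baseline. The totals are copied from the list above. The helper name `rank_with_ties` is illustrative.

```python
# Totals copied from the ranking above; each is out of 125.
MAX_TOTAL = 125
totals = {
    "Ghost": 125,
    "AIMAGE": 98,
    "Sora": 98,
    "Flux 2": 75,
    "Nano Banana": 62,
    "Midjourney": 54,
    "Grok": 52,
}


def rank_with_ties(scores: dict[str, int]) -> list[tuple[int, str, int]]:
    """Return (rank, name, score) rows; equal scores share a rank."""
    ordered = sorted(scores.items(), key=lambda item: -item[1])
    rows: list[tuple[int, str, int]] = []
    for position, (name, score) in enumerate(ordered, start=1):
        # Reuse the previous rank when the score ties with the row above.
        if rows and rows[-1][2] == score:
            rank = rows[-1][0]
        else:
            rank = position
        rows.append((rank, name, score))
    return rows


for rank, name, score in rank_with_ties(totals):
    pct = 100 * score / MAX_TOTAL
    gap = MAX_TOTAL - score
    print(f"{rank}. {name:<12} {score:>3}/{MAX_TOTAL}  {pct:5.1f}%  gap to baseline: {gap}")
```

On these numbers, the two best AI systems reach 78.4% of the baseline total, and everything else lands at 60% or lower.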

The Future of Medical Illustration

I'm not sure how long it will be before AI can do what Ghost does well enough that people can't tell our work from the AI's output. Based on our tests today, we probably have a while.

But I'm not naive. The trajectory is clear:

- Fewer people making images from scratch

- More people supervising, correcting, and approving

- Artists pushed into higher-leverage roles like creative direction and prompt/pipeline design

- Downstream roles expanding: quality control, fact-checking, correction passes

AI does help us in many other ways already: render checking, project management, cleaning up models, textures, and shaders. It's a tool, and we use it.

Should You Pursue a Career in Medical Illustration?

Is your expensive and painstaking degree in Medical Illustration a waste of time? That's not for me to say. We'll always need people who know what medically accurate images need to be, even if AI ends up doing all the work. Your knowledge will always be necessary, even if your Maya and Photoshop skills aren't worth the many hours it would take for you to make something that AI can make in seconds.

Maybe the better question is: did I encourage my children to follow in my footsteps?

No. But largely because they have their own interests and talents, and everyone must follow their own path. When they asked for my honest opinion, I did sway them toward careers that I thought were a bit less likely to be replaced by AI in the future. My son studied VR development at Ringling and is currently working as a pre-vis designer at GM, making the latest model Corvette look cooler than it ever has. My daughter is studying to become a PA because she's more interested in working directly with patients than in my profession, which makes the content that educates both the patient and the practitioner.

For her, this is more real. For me, this is inefficient. "One patient at a time? That's gotta be the slowest way to help people ever." :P